From static forms to context-aware interfaces

Most digital systems rely on predefined user interfaces: developers decide what users can input, and how. While effective for stable processes, such designs are rigid when user needs evolve dynamically.

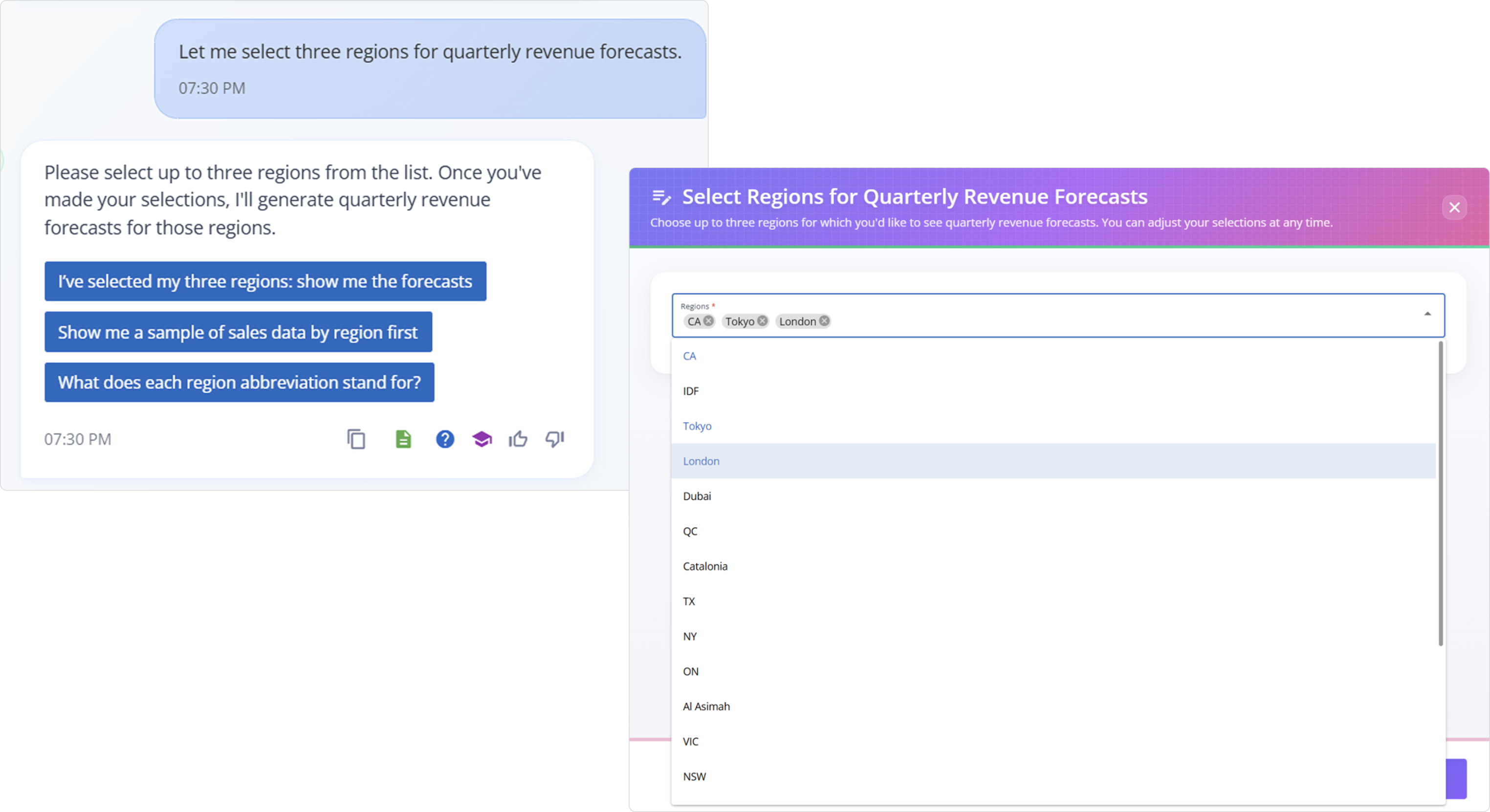

Generative models, however, can now synthesize interface specifications from user intent. A simple instruction such as “Let me select three regions for quarterly revenue forecasts” can be converted into a structured schema that defines fields, data types, and layouts without human intervention. This capability allows systems to generate or modify forms in real time, tailoring them to each interaction context.

Users could name the regions verbally, but they would first need to ask the AI for the list of available regions. Asking the AI to generate a form achieves both objectives at once: it retrieves the list and provides a structured way to select or enter the regions.

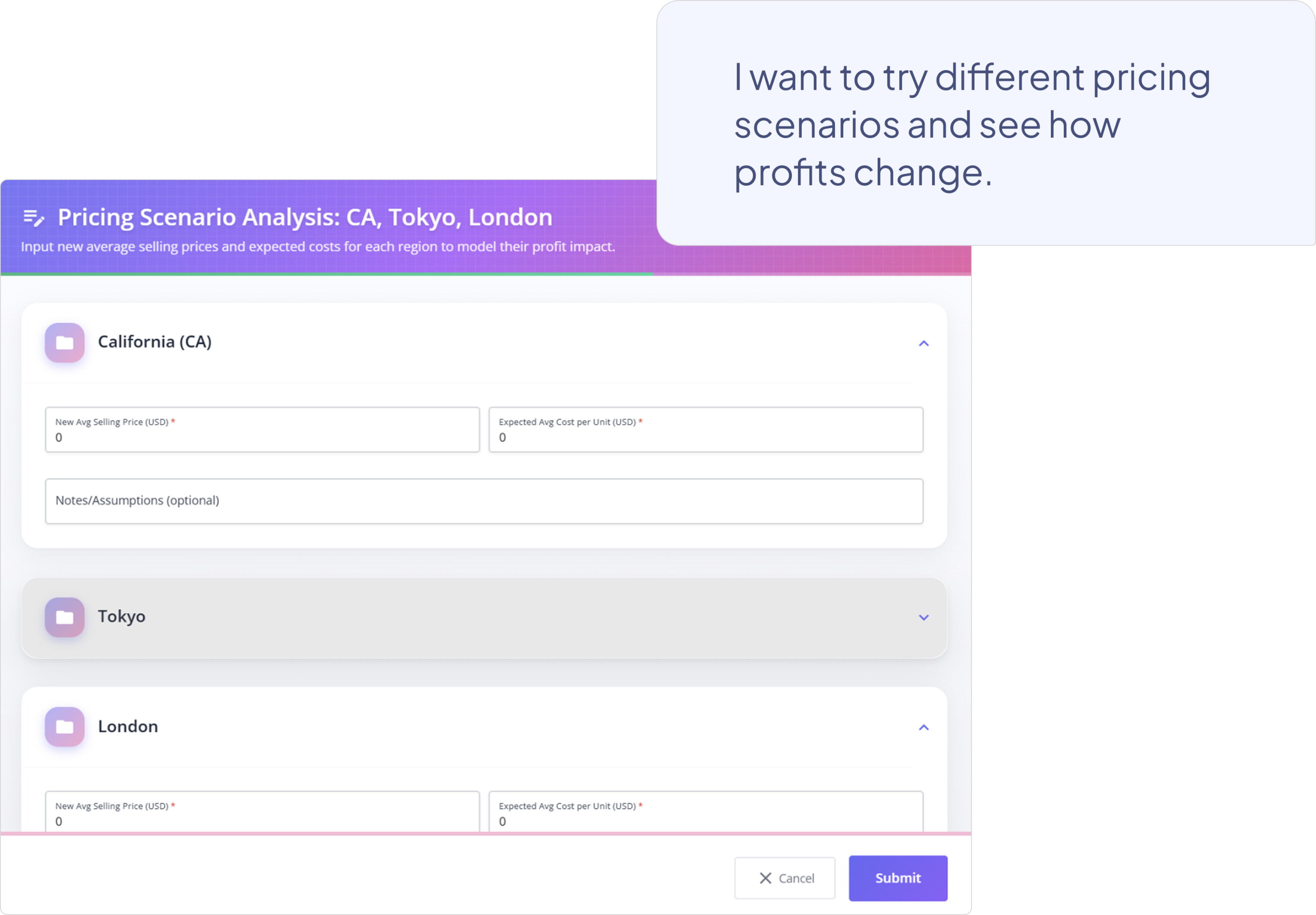

This also eliminates guessing. For instance, a user might say “Canada, Tokyo, and London” while the dataset contains CA, Tokyo, and London. In such cases, the AI could miss CA because it doesn’t match the spoken name exactly. By requesting a form instead, we ensure that every value the user selects is one that actually exists in the dataset.

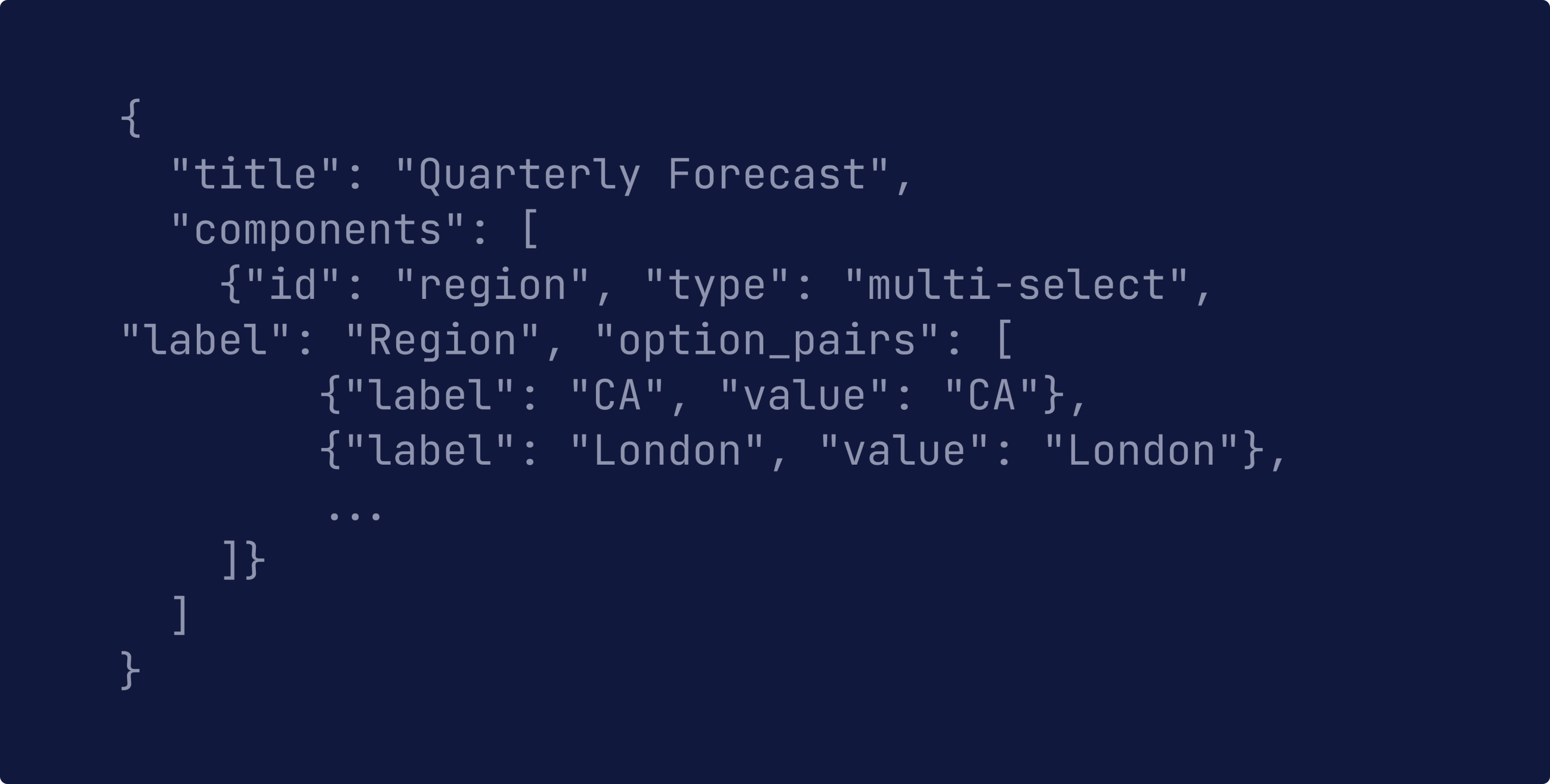
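The mismatch described above can be illustrated with a minimal Python sketch. The dataset values come from the example in the text; the function names `match_spoken` and `form_options` are hypothetical and only serve the illustration. Naive exact matching drops "Canada", while options built from the dataset can only ever yield valid values.

```python
# Dataset values from the example in the text: the dataset stores "CA", not "Canada".
DATASET_REGIONS = ["CA", "Tokyo", "London"]


def match_spoken(names, dataset_values):
    """Naive exact matching of free-text names against dataset values (hypothetical helper)."""
    known = set(dataset_values)
    matched = [name for name in names if name in known]
    missed = [name for name in names if name not in known]
    return matched, missed


def form_options(dataset_values):
    """Build selection options directly from the dataset (hypothetical helper)."""
    return [{"value": value, "label": value} for value in dataset_values]


matched, missed = match_spoken(["Canada", "Tokyo", "London"], DATASET_REGIONS)
print(matched)  # ['Tokyo', 'London']
print(missed)   # ['Canada']

# Whatever the user picks in a form is, by construction, a dataset value.
options = form_options(DATASET_REGIONS)
selection = [options[0]["value"], options[1]["value"]]
print(all(value in DATASET_REGIONS for value in selection))  # True
```

A real system would likely add friendlier labels (for example, showing "Canada" for the value "CA"), but the submitted value would still be the dataset key.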

Structured schema for dynamic forms

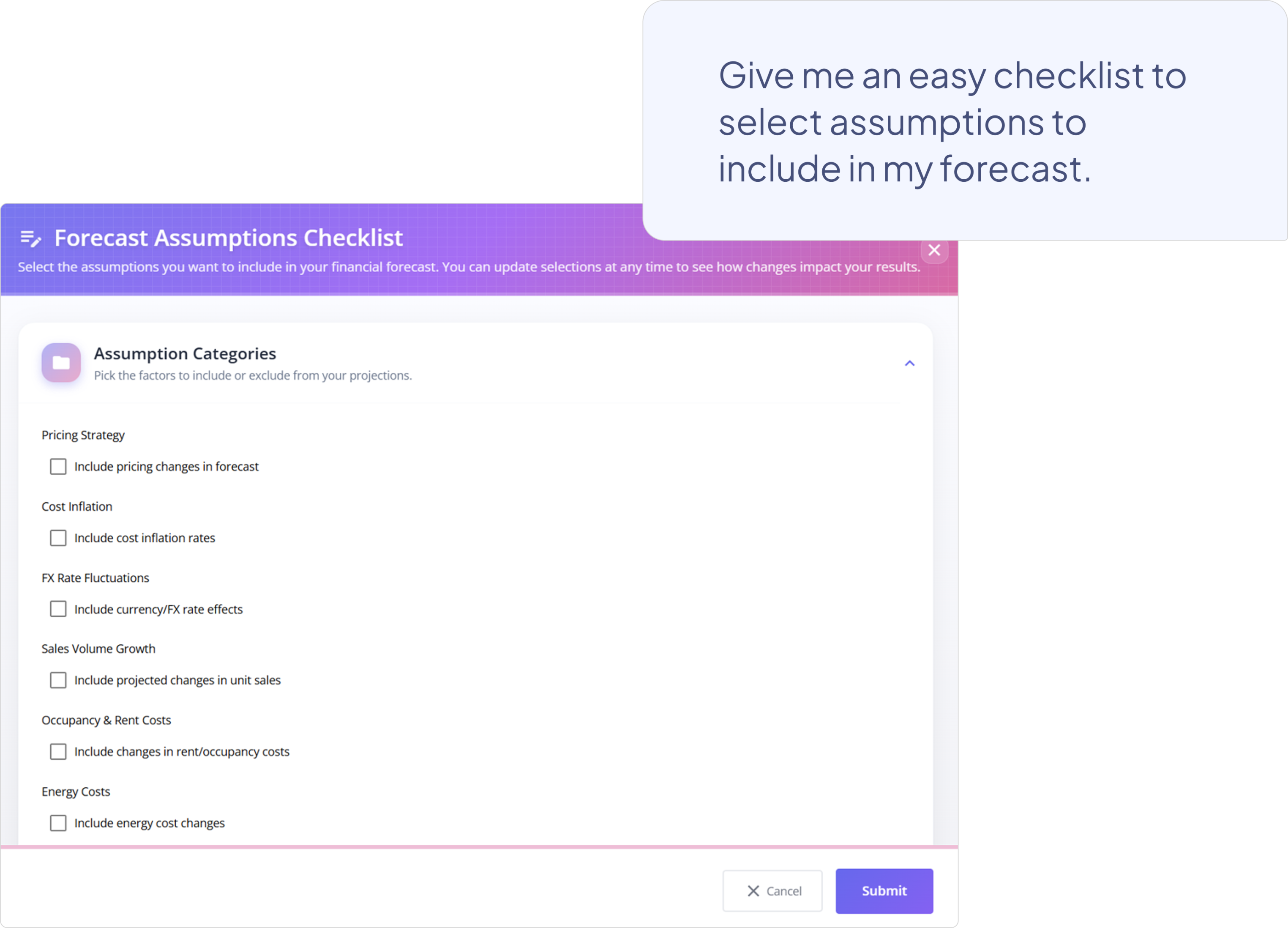

A structured schema describes a user interface in a machine-interpretable form and is its essential building block. It specifies components (such as text inputs, dropdowns, and checkboxes), their relationships, layout constraints, and validation logic.

For example, a structured schema might describe:

A generative system interprets this structure to render a functional form instantly, ready for user input, without manual UI design. This mechanism serves as the foundation for adaptive data collection and structured decision workflows.

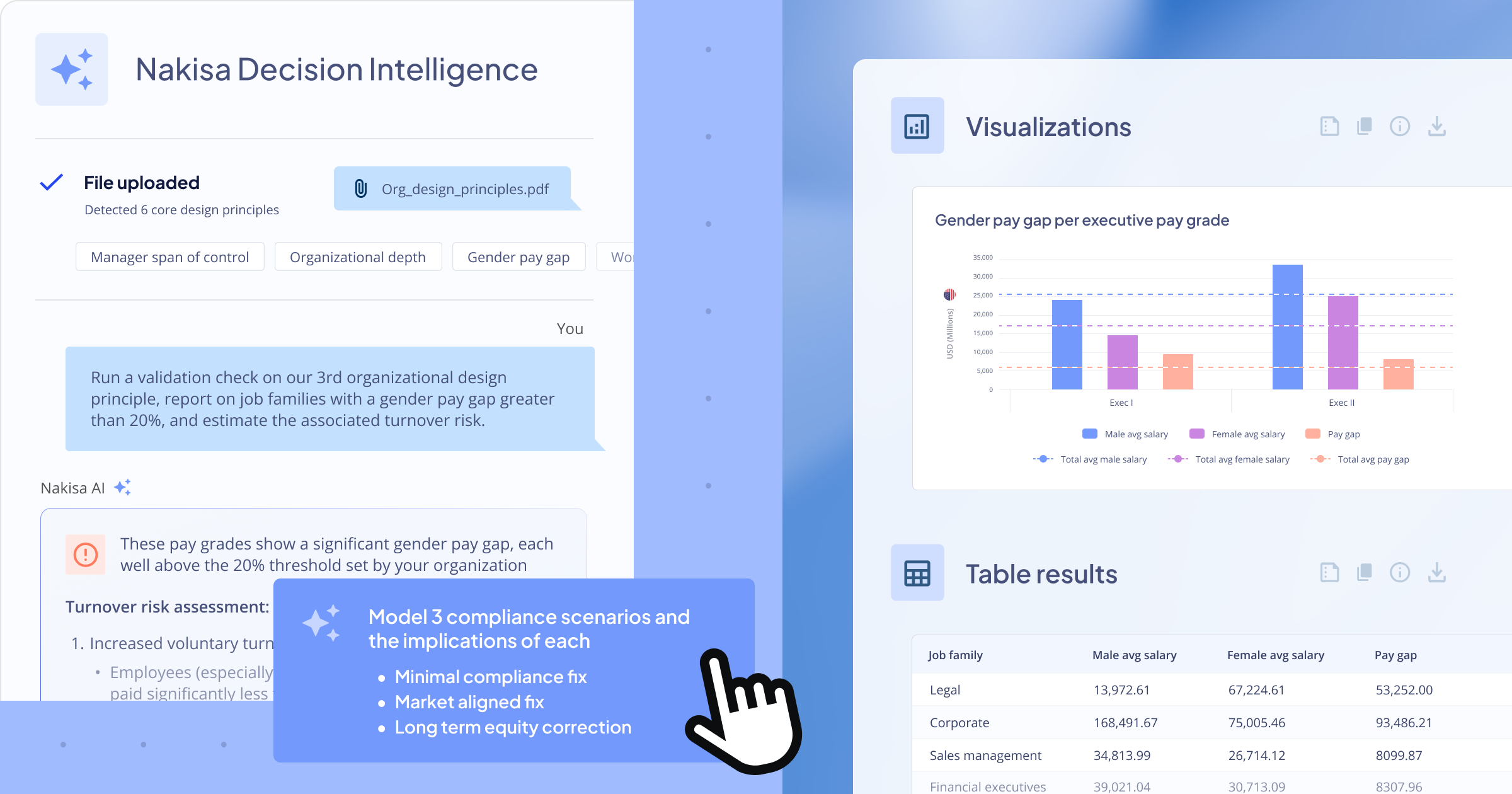

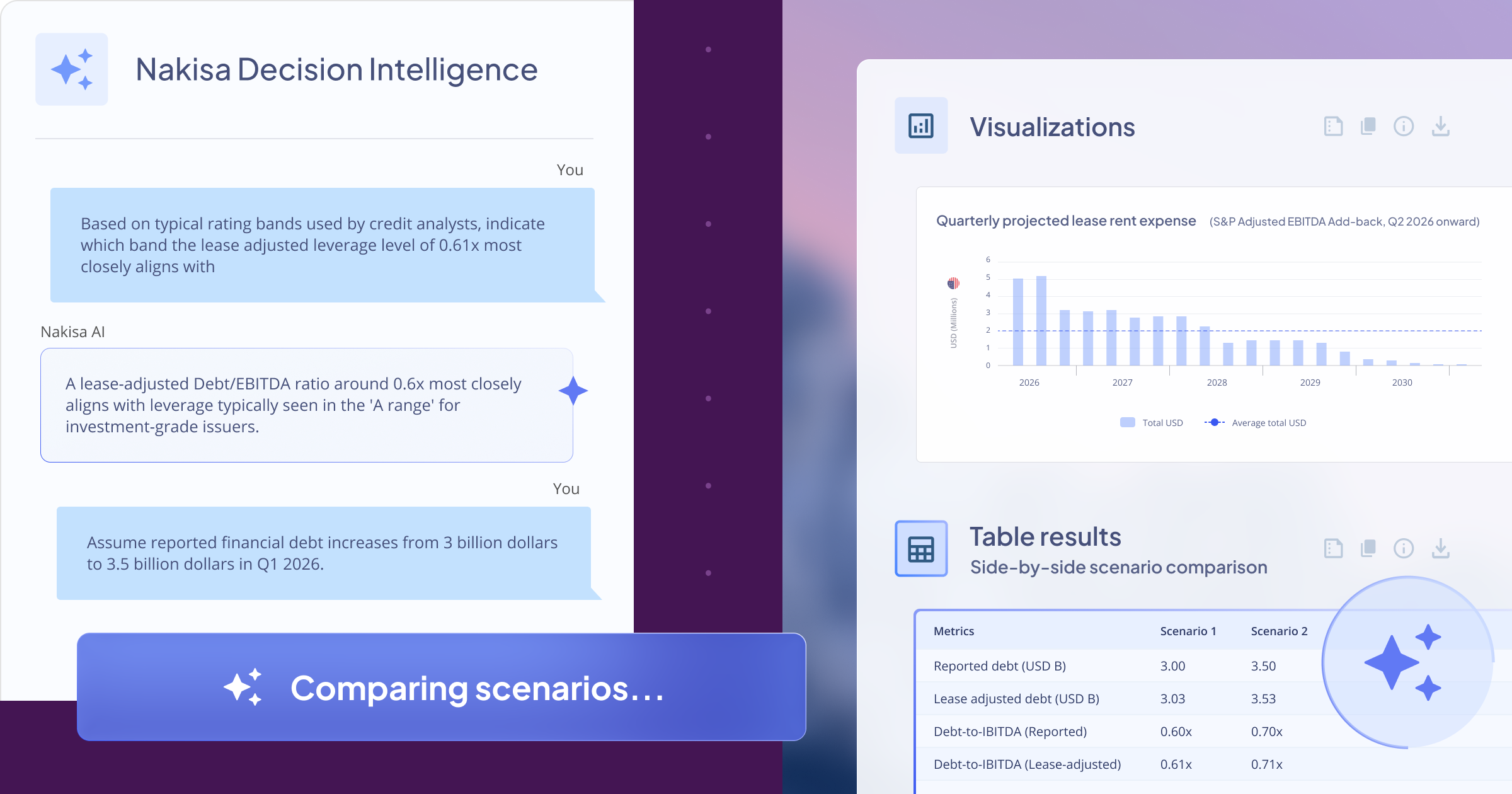

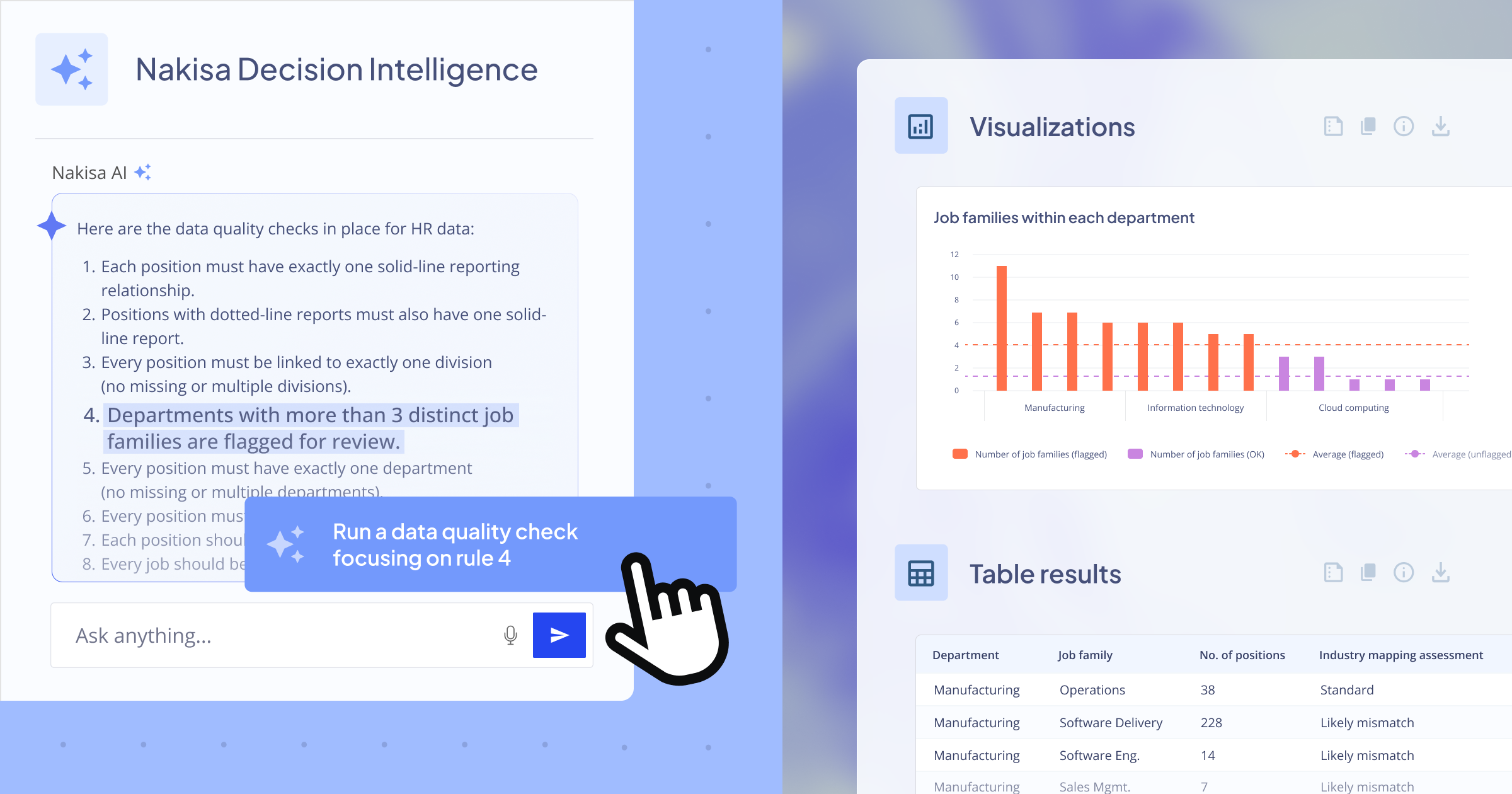
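To make the idea of a structured schema more concrete, here is a minimal Python sketch for the "select three regions" request. The key names (`component`, `options`, `validation`, `min_items`, `max_items`), the extra "Berlin" option, and the `validate_submission` helper are all assumptions made for illustration; they are not a published format or the schema any particular product uses.

```python
# Hypothetical schema: keys, component names, and option values are illustrative.
REGION_FORM_SCHEMA = {
    "title": "Quarterly revenue forecast",
    "layout": "vertical",
    "fields": [
        {
            "name": "regions",
            "component": "multi_select",
            "label": "Regions",
            "options": ["CA", "Tokyo", "London", "Berlin"],
            "validation": {"min_items": 3, "max_items": 3},
        },
        {
            "name": "quarter",
            "component": "dropdown",
            "label": "Quarter",
            "options": ["Q1", "Q2", "Q3", "Q4"],
            "validation": {"required": True},
        },
    ],
}


def validate_submission(schema, submission):
    """Return a list of error messages; an empty list means the submission is valid."""
    errors = []
    for field in schema["fields"]:
        name = field["name"]
        rules = field.get("validation", {})
        value = submission.get(name)

        if field["component"] == "multi_select":
            chosen = value or []
            unknown = [v for v in chosen if v not in field["options"]]
            if unknown:
                errors.append(f"{name}: unknown options {unknown}")
            if len(chosen) < rules.get("min_items", 0):
                errors.append(f"{name}: select at least {rules['min_items']}")
            if "max_items" in rules and len(chosen) > rules["max_items"]:
                errors.append(f"{name}: select at most {rules['max_items']}")
        elif field["component"] == "dropdown":
            if value is None:
                if rules.get("required"):
                    errors.append(f"{name}: a value is required")
            elif value not in field["options"]:
                errors.append(f"{name}: unknown option {value!r}")
    return errors


ok = validate_submission(
    REGION_FORM_SCHEMA,
    {"regions": ["CA", "Tokyo", "London"], "quarter": "Q2"},
)
bad = validate_submission(REGION_FORM_SCHEMA, {"regions": ["Canada", "Tokyo"]})
print(ok)
print(bad)
```

The same schema could drive both rendering and validation, which is one way to keep what the user sees and what the system accepts in sync.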

Integrating generative intelligence with decision systems

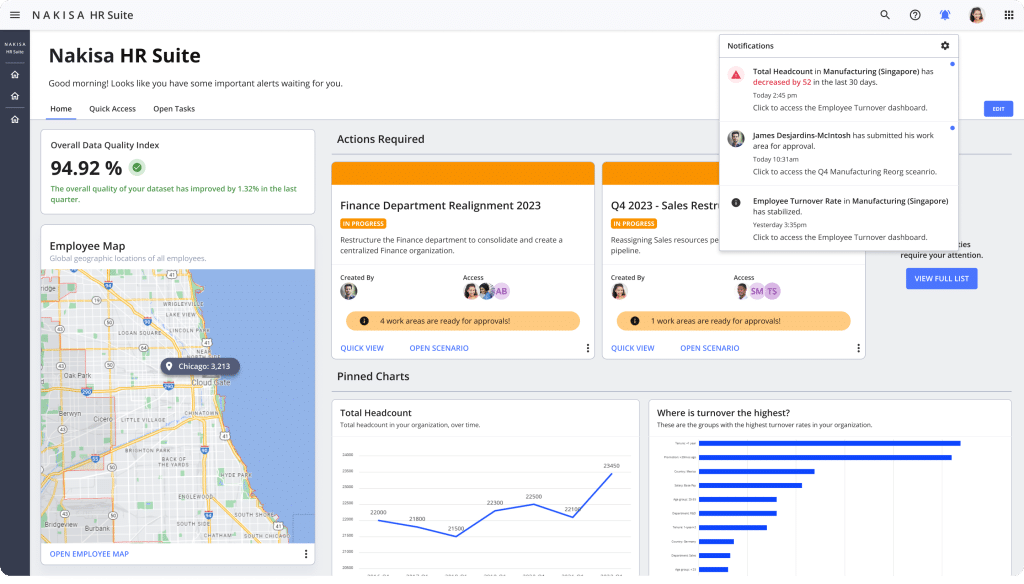

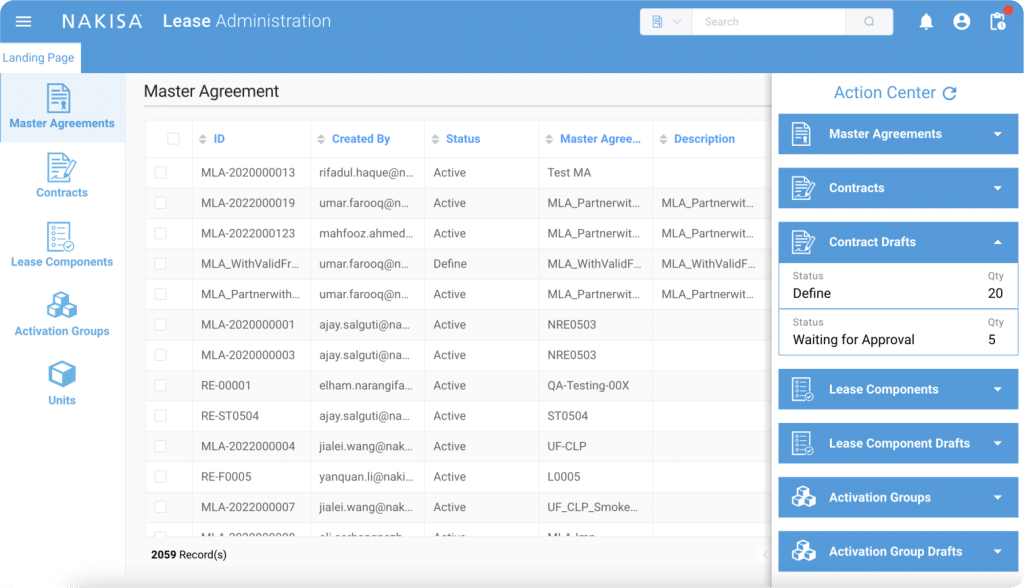

When integrated into a decision intelligence framework, such as Nakisa Decision Intelligence (NDI), the form generation capability extends beyond UI convenience.

It supports:

- Structured reasoning: ensuring user inputs align with decision models and data validation.

- Semantic precision: resolving differences between how users express information (e.g., saying “Canada, Tokyo, and London”) and how it appears in data (CA, Tokyo, London), ensuring exact matches and eliminating ambiguity.

- Adaptive workflows: dynamically requesting only the information relevant to the context.

- Consistency and traceability: maintaining standardized data formats across autonomous decisions.

NDI’s approach uses a generative layer to interpret user objectives and translate them into functional artifacts, ranging from analytical tables and scenarios to structured forms. The result is a system that can generate not only insights but also the interfaces needed to capture context for those insights.
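As a rough illustration of the "adaptive workflows" point, the Python sketch below filters a set of form fields down to those relevant to the current context. It is not NDI's implementation; the field names, the `show_when` key, and the `relevant_fields` helper are hypothetical.

```python
# Hypothetical field definitions; "show_when" is an illustrative key, not a standard.
FIELDS = [
    {"name": "scenario_type", "show_when": None},
    {"name": "interest_rate", "show_when": {"scenario_type": "sensitivity"}},
    {"name": "growth_rates", "show_when": {"scenario_type": "regional_forecast"}},
]


def relevant_fields(fields, context):
    """Keep fields with no condition, or whose condition matches the context."""
    selected = []
    for field in fields:
        condition = field["show_when"]
        if condition is None or all(
            context.get(key) == expected for key, expected in condition.items()
        ):
            selected.append(field["name"])
    return selected


print(relevant_fields(FIELDS, {}))
print(relevant_fields(FIELDS, {"scenario_type": "sensitivity"}))
```

With an empty context only the unconditional field is requested; once the scenario type is known, the form grows to include just the inputs that scenario needs.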

Productivity through generative interaction

Dynamic UIs can meaningfully improve productivity across analytical, managerial, and planning workflows. Let's have a look at some examples:

- “I want to try different pricing scenarios and see how profits change.”

- “Create a quick table to input growth rates for different regions.”

- “Make a quick slider-based input to test sensitivity to interest rate changes.”

- “Give me an easy checklist to select assumptions to include in my forecast.”

Such interfaces reduce cognitive load. Users interact in natural language, while the system manages structure and compliance transparently. This fosters fluid collaboration between human intuition and computational logic.

Implications and future directions

Dynamic interface generation highlights a broader trend: AI systems are no longer limited to reasoning over data; they can now construct the interaction environment itself.

This raises important research directions:

- Schema grounding: mapping language to structured schemas with semantic consistency.

- Context persistence: retaining interface state across multi-turn interactions.

- Human-in-the-loop design: ensuring interpretability and transparency in generated UIs.

- Standardization: evolving common formats for cross-system interoperability.

These are not product-specific ambitions but broader industry challenges that combine cognitive modeling, information architecture, and applied AI design.

Conclusion

Dynamic UI generation exemplifies how generative AI extends its utility beyond content creation into interaction synthesis. By connecting intent understanding with structured form generation, systems like Nakisa Decision Intelligence (NDI) offer a glimpse of how adaptive, context-aware decision environments may function in the near future.

Rather than replacing design, such systems complement human reasoning, automating structure while leaving meaning to the user. The result is not just efficiency, but a more fluent dialogue between humans and intelligent systems.

You can request a demo of NDI here, or reach out to your dedicated Client Success Manager to access the preview environment. I often write about AI and Nakisa innovations. Connect with me on LinkedIn for the latest updates!