Challenge of hallucination

LLMs excel at contextual reasoning and intuitive understanding. They can interpret intricate questions, spot relationships, and generate meaningful insights from unstructured text.

But when it comes to facts, math, or deterministic accuracy, they sometimes “hallucinate,” producing responses that sound right but are actually wrong.

This is not a flaw of intelligence; it’s a result of how these models work. They predict the most likely sequence of words, not necessarily the true one.

For businesses relying on LLMs for decisions, compliance, or analytics, hallucination is not a tolerable risk. It’s a barrier to trust. The risk grows when a private LLM is given business data that is not part of its "world knowledge".

Solution: pairing intuition with determinism

The key to overcoming hallucination isn’t to limit LLMs; it’s to complement them.

By combining LLM intuition with deterministic systems, we can create hybrid architectures that offer both creativity and correctness.

Take any LLM as an example: when a task requires numerical precision, it can hand off the work to a Python execution layer. The model interprets the problem, while the math itself is computed deterministically by code.
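
As a rough illustration of such a hand-off, the sketch below assumes the model returns an arithmetic expression as plain text and that a small Python layer evaluates it. The function name `evaluate_arithmetic` and the sample expression are hypothetical, and a production execution layer would likely be a sandboxed interpreter rather than this minimal evaluator.

```python
import ast
import operator

# Whitelisted operators: anything else is rejected rather than guessed at.
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def _is_number(node):
    # Accept int and float literals only (bool is a subclass of int, so exclude it).
    return (
        isinstance(node, ast.Constant)
        and isinstance(node.value, (int, float))
        and not isinstance(node.value, bool)
    )


def evaluate_arithmetic(expression):
    """Evaluate a plain arithmetic expression without calling eval()."""

    def _eval(node):
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        if _is_number(node):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        raise ValueError(f"unsupported expression element: {type(node).__name__}")

    return _eval(ast.parse(expression, mode="eval"))


# Hypothetical model output for "What is 18% of 1,249.50, plus 42?"
model_expression = "1249.50 * 18 / 100 + 42"
result = evaluate_arithmetic(model_expression)  # approximately 266.91
```

The model only has to get the expression right; the number itself comes from the interpreter. Anything outside the whitelist, such as a function call, raises `ValueError` instead of being executed.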

This orchestration allows the system to decide dynamically:

- When intuition is enough (e.g., writing, reasoning, planning).

- When precision is mandatory (e.g., calculations, data retrieval, analytics).
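
One way to picture this decision is a small dispatcher. The sketch below assumes the model has been prompted to reply with JSON that declares a `mode`; the field names, the `TOOLS` registry, and the sample replies are all hypothetical and only illustrate the routing idea.

```python
import json
from statistics import mean

# Hypothetical registry of deterministic tools the orchestrator may call.
TOOLS = {
    "sum": lambda values: sum(values),
    "mean": lambda values: mean(values),
}


def dispatch(model_reply):
    """Route a structured model reply to free text or a deterministic tool."""
    reply = json.loads(model_reply)
    mode = reply.get("mode")
    if mode == "intuition":
        # Writing, reasoning, planning: the model's own text is the answer.
        return {"source": "llm", "answer": reply["text"]}
    if mode == "precision":
        tool_name = reply.get("tool")
        if tool_name not in TOOLS:
            raise ValueError(f"unknown tool: {tool_name!r}")
        # Calculations: the number comes from code, not from the model.
        return {"source": tool_name, "answer": TOOLS[tool_name](reply["values"])}
    raise ValueError(f"unknown mode: {mode!r}")


# Hypothetical model replies for a planning question and a calculation.
planning = dispatch('{"mode": "intuition", "text": "Start with the Q3 backlog."}')
total = dispatch('{"mode": "precision", "tool": "sum", "values": [120, 80, 45]}')
# total == {"source": "sum", "answer": 245}
```

An unknown mode or tool is treated as an error rather than silently answered, which keeps the routing itself deterministic.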

LLMs have reached a point where they can be prompted to reliably determine whether a task requires intuition or precision. We humans are good at both, and we rely on tools to help us: digital devices (phones, computers, calculators) and the programs running on them.

LLMs can achieve remarkable results when given the same choice. When we empower LLMs to decide how to think and when to use the right tools, fascinating and highly practical behaviors emerge.

The art lies in building the right architecture that enables LLMs to perform, along with guardrails that deterministically detect when they go off course.

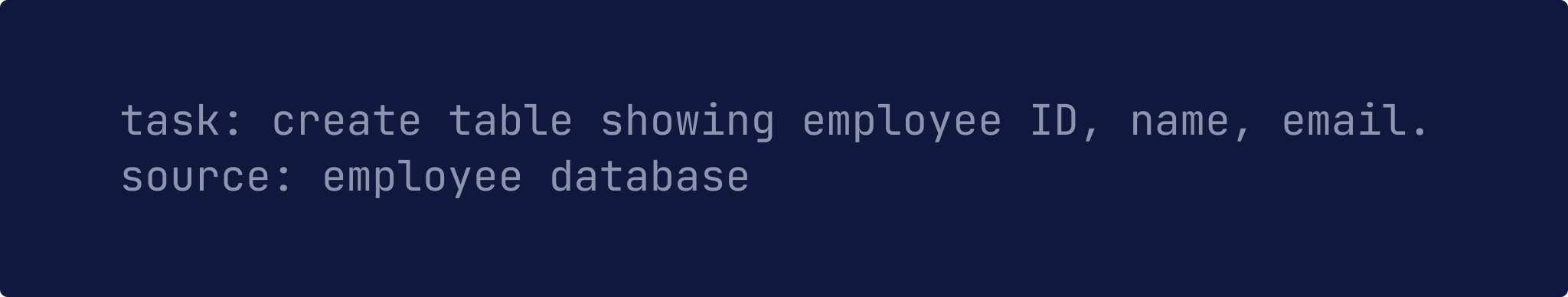

From language to executable specifications

The most powerful application of this principle lies in translating natural language into executable specifications: instructions that machines can run without ambiguity.

For instance:

An LLM understands the user’s intent, but instead of generating a table itself, it can translate the request into an executable specification. A deterministic tool then executes it exactly as written.

This combination removes hallucination from the execution step while preserving the user’s ability to interact in plain language. It also provides full transparency: users can see exactly what was executed. Even if the generated executable specification partially misunderstands their intent, they still know precisely what was done and how it was done.
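
To make the table example concrete, here is a minimal sketch of what such a specification and its executor could look like. The specification schema (`filter`, `group_by`, `aggregate`), the sample rows, and the function names are assumptions made for illustration; they are not the format used by any particular product.

```python
from collections import defaultdict

# Hypothetical rows standing in for business data.
ROWS = [
    {"region": "EMEA", "department": "Sales", "headcount": 12},
    {"region": "EMEA", "department": "Finance", "headcount": 5},
    {"region": "APAC", "department": "Sales", "headcount": 9},
    {"region": "APAC", "department": "Finance", "headcount": 4},
]

# Hypothetical specification a model might emit for
# "Show total headcount per region for Sales and Finance".
SPEC = {
    "filter": {"column": "department", "in": ["Sales", "Finance"]},
    "group_by": "region",
    "aggregate": {"column": "headcount", "function": "sum"},
}

AGGREGATES = {"sum": sum, "max": max, "min": min}


def validate(spec, columns):
    """Return a list of problems: unknown columns or unknown functions."""
    errors = []
    for name in (spec["filter"]["column"], spec["group_by"], spec["aggregate"]["column"]):
        if name not in columns:
            errors.append(f"unknown column: {name}")
    if spec["aggregate"]["function"] not in AGGREGATES:
        errors.append(f"unknown function: {spec['aggregate']['function']}")
    return errors


def execute(spec, rows):
    """Run the specification; the same spec and rows always give the same table."""
    errors = validate(spec, set(rows[0]))
    if errors:
        raise ValueError("; ".join(errors))
    groups = defaultdict(list)
    for row in rows:
        if row[spec["filter"]["column"]] in spec["filter"]["in"]:
            groups[row[spec["group_by"]]].append(row[spec["aggregate"]["column"]])
    function = AGGREGATES[spec["aggregate"]["function"]]
    return {key: function(values) for key, values in sorted(groups.items())}


table = execute(SPEC, ROWS)  # {"APAC": 13, "EMEA": 17}
```

The user can read `SPEC` to see exactly what was asked of the data, and a specification that refers to a column that does not exist is rejected before anything runs.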

An important aspect to understand is the difference between programming languages and executable specifications. While programming languages define how to perform a task through explicit, step-by-step instructions, executable specifications describe what needs to be achieved in a structured, intent-driven way (declarative in nature). In fact, executable specifications can themselves be formal languages, defined by deterministic grammars as seen in compiler construction. This enables both precise interpretation and reliable execution.
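
The grammar idea can be illustrated with a toy example. The sketch below assumes a tiny, made-up query language with a three-keyword grammar and parses it by hand; the grammar, keywords, and output structure are hypothetical and far smaller than anything a real system would use.

```python
import re

# Hypothetical grammar, in EBNF-like notation:
#   query     = aggregate column "BY" column [ "WHERE" column "=" string ] ;
#   aggregate = "SUM" | "MIN" | "MAX" ;
TOKEN = re.compile(r'\s*(?:(?P<string>"[^"]*")|(?P<word>[A-Za-z_]+)|(?P<eq>=))')
AGGREGATES = {"SUM", "MIN", "MAX"}


def tokenize(text):
    """Split the query into (kind, value) pairs or raise SyntaxError."""
    tokens, position = [], 0
    text = text.rstrip()
    while position < len(text):
        match = TOKEN.match(text, position)
        if match is None:
            raise SyntaxError(f"unexpected character near offset {position}")
        tokens.append((match.lastgroup, match.group(match.lastgroup)))
        position = match.end()
    return tokens


def parse(text):
    """Parse a query into a declarative specification, or raise SyntaxError."""
    tokens = tokenize(text)

    def take(kind, expected=None):
        if not tokens:
            raise SyntaxError("unexpected end of query")
        token_kind, value = tokens.pop(0)
        if token_kind != kind or (expected is not None and value not in expected):
            raise SyntaxError(f"unexpected token: {value}")
        return value

    spec = {"function": take("word", AGGREGATES).lower()}
    spec["column"] = take("word")
    take("word", {"BY"})
    spec["group_by"] = take("word")
    if tokens:
        # Optional WHERE clause.
        take("word", {"WHERE"})
        column = take("word")
        take("eq")
        spec["filter"] = {"column": column, "equals": take("string").strip('"')}
    if tokens:
        raise SyntaxError(f"unexpected trailing token: {tokens[0][1]}")
    return spec


spec = parse('SUM headcount BY region WHERE department = "Sales"')
```

The query says what is wanted (a sum of one column grouped by another), not how to loop over the data. Because the grammar is deterministic, a given query either parses to exactly one specification or is rejected with a `SyntaxError`.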

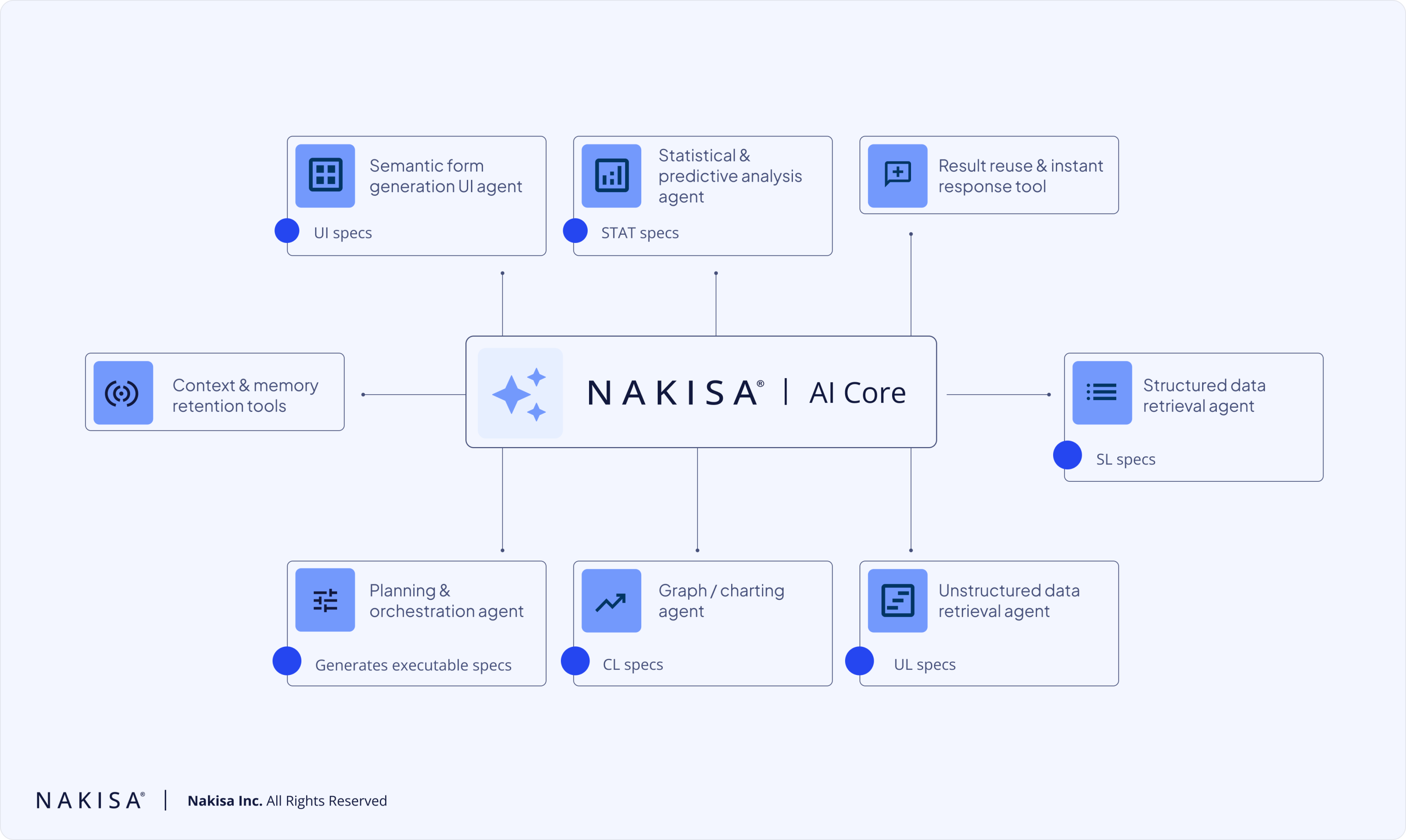

Intelligent orchestration in practice

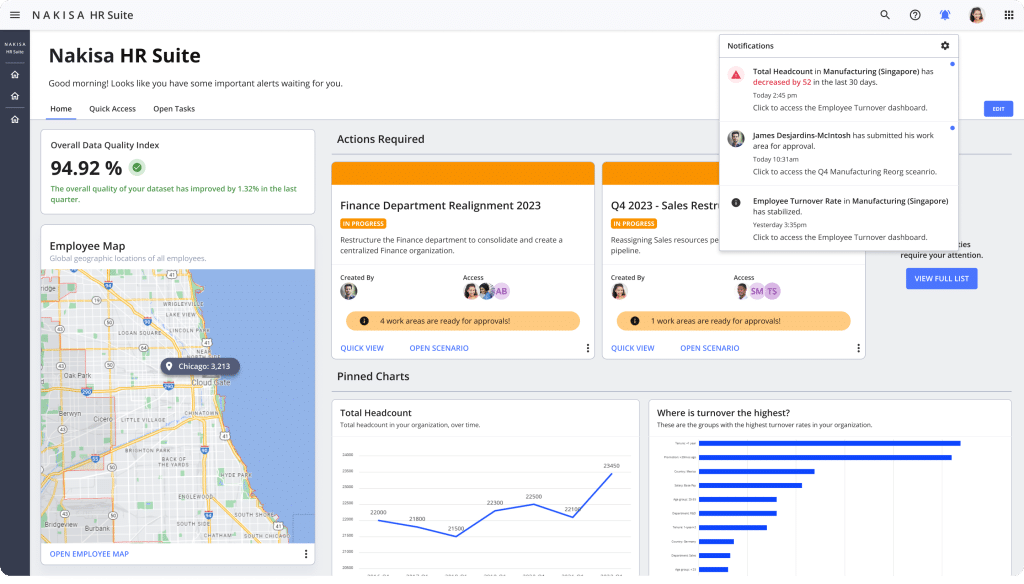

This is more than theory; it’s becoming a defining technique at Nakisa Labs for building deterministic GenAI-powered systems.

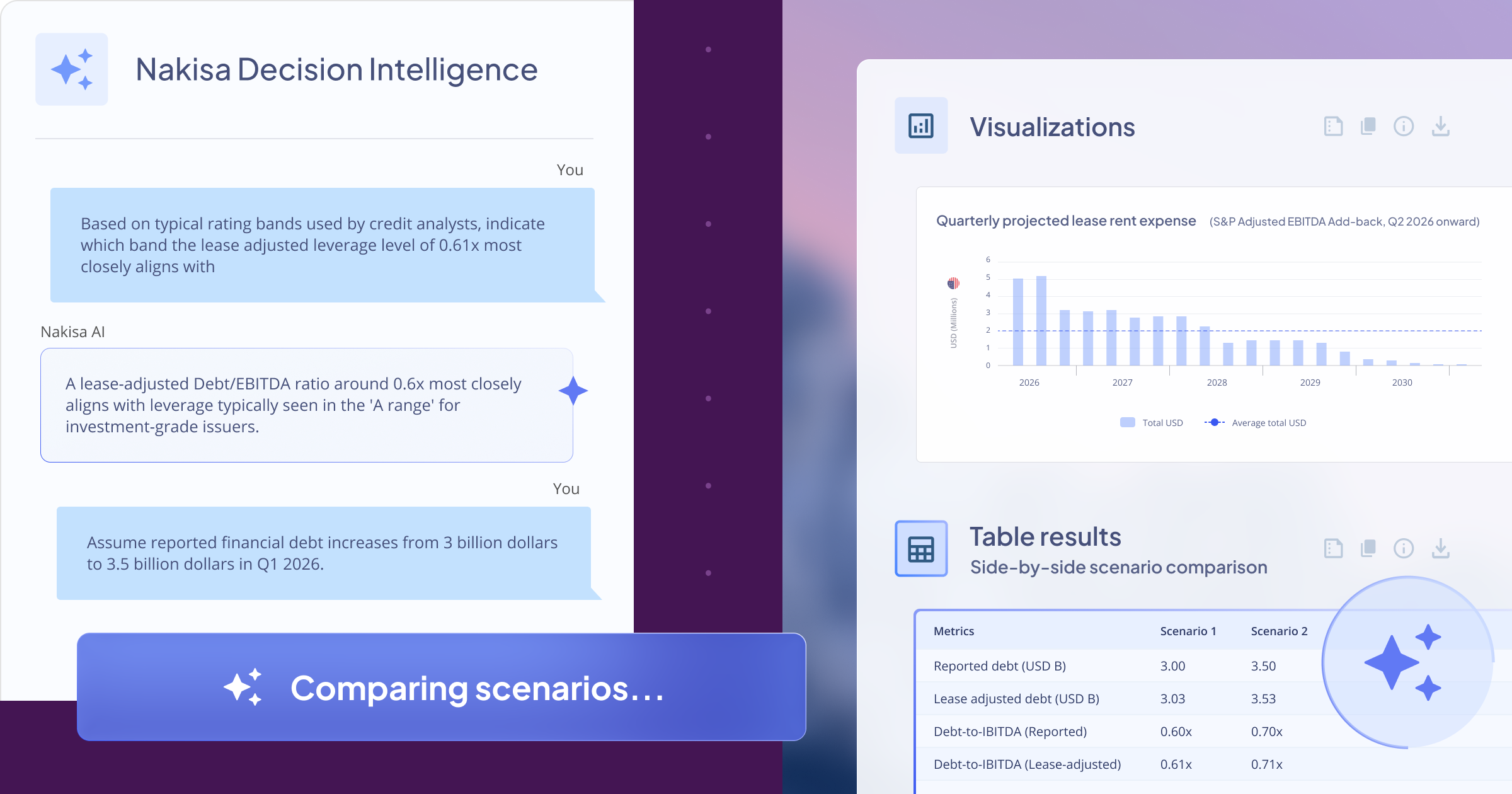

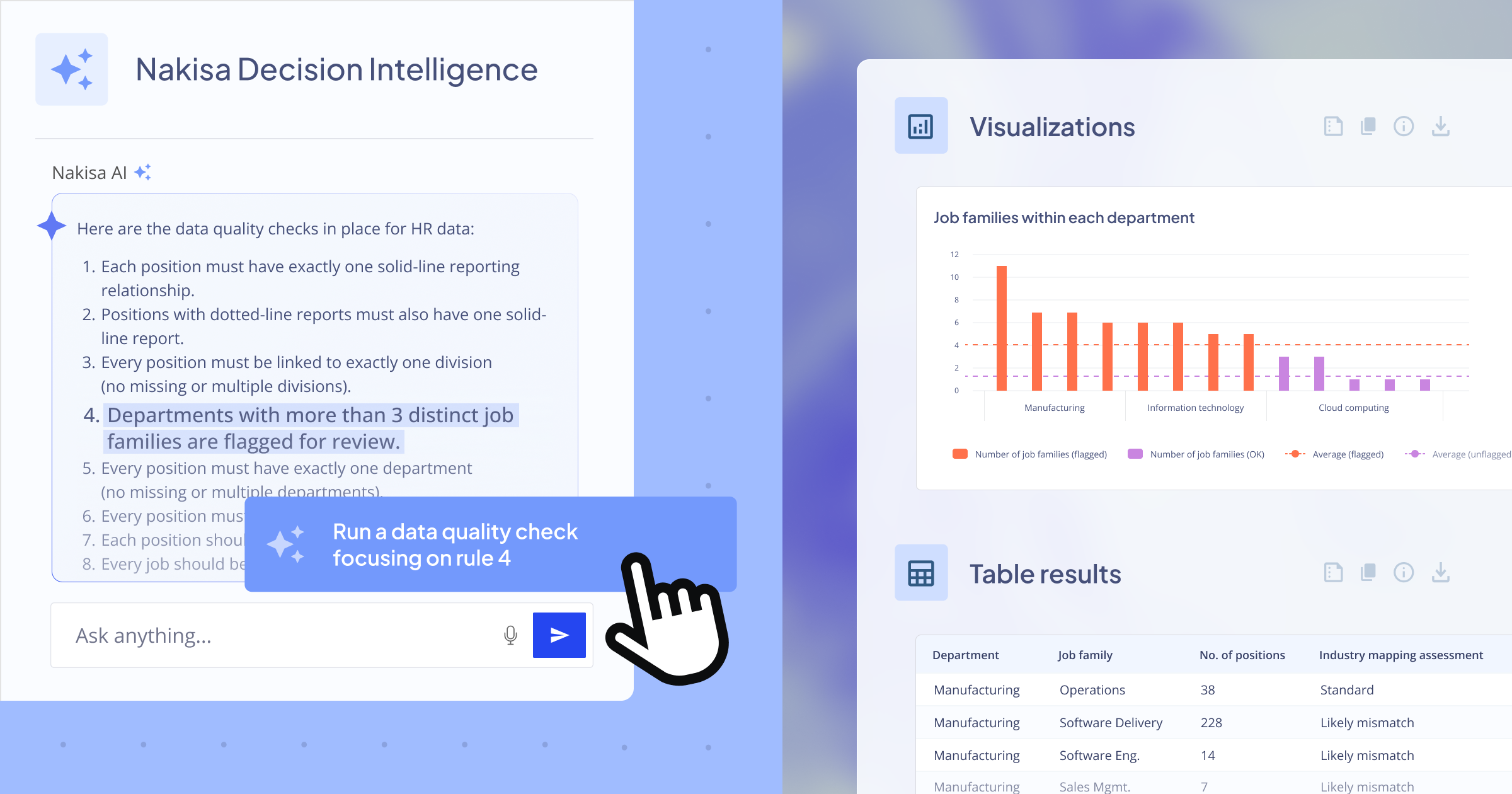

In Nakisa Decision Intelligence, for example, LLMs are being orchestrated with deterministic analytics engines.

The LLM layer interprets business context and human intent, identifying what decision a user is trying to make.

Then, deterministic modules execute structured, executable specifications that ensure accuracy, precision, traceability, and compliance.

The result is an intelligent ecosystem where:

- LLMs provide context, reasoning, and intuition.

- Deterministic tools ensure mathematical and operational precision.

This "division of cognitive labor" minimizes hallucinations while empowering users to ask complex, contextual questions in natural language and receive answers they can both trust and investigate. "Investigate" here means that users aren’t limited to taking outputs at face value; they can trace how each answer was derived, review the underlying executable specifications, and validate every step of the reasoning or computation. This transparency transforms AI from a black box into an explainable, auditable system.
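
As a simple picture of what "investigate" could mean in code, the sketch below bundles the question, the specification that was executed, and the result into one trace record. The `build_trace` function and its field names are hypothetical; they illustrate the idea of an auditable record and do not describe how any specific product stores its traces.

```python
import hashlib
import json


def build_trace(question, spec, result):
    """Bundle what was asked, what was executed, and what came back."""
    # Canonical JSON (sorted keys) so the same spec always has the same fingerprint.
    canonical = json.dumps(spec, sort_keys=True)
    return {
        "question": question,
        "specification": spec,
        "specification_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "result": result,
    }


# Hypothetical question, specification, and result.
trace = build_trace(
    "Total headcount per region?",
    {"group_by": "region", "aggregate": {"column": "headcount", "function": "sum"}},
    {"APAC": 13, "EMEA": 17},
)
```

A reviewer can rerun the stored specification against the same data and compare the result, and the fingerprint makes it easy to check that the specification was not altered after the fact.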

Outcome: the best of both worlds

By blending human-like understanding with machine-level accuracy, we achieve systems that are:

- Trustworthy: grounded in verifiable results.

- Robust: resilient to human error or language ambiguity.

- Empowering: allowing anyone to query data, design workflows, or simulate outcomes naturally.

This approach represents the next evolution of AI: not replacing human reasoning, but extending it with a foundation of deterministic precision.

Summary

The future of AI lies not in choosing between intuition and precision, but in harmonizing them.

As organizations embrace hybrid systems where LLMs orchestrate deterministic engines, we edge closer to an era of trustworthy, explainable, and intelligent automation.

That’s how we bridge the gap between imagination and accuracy.
